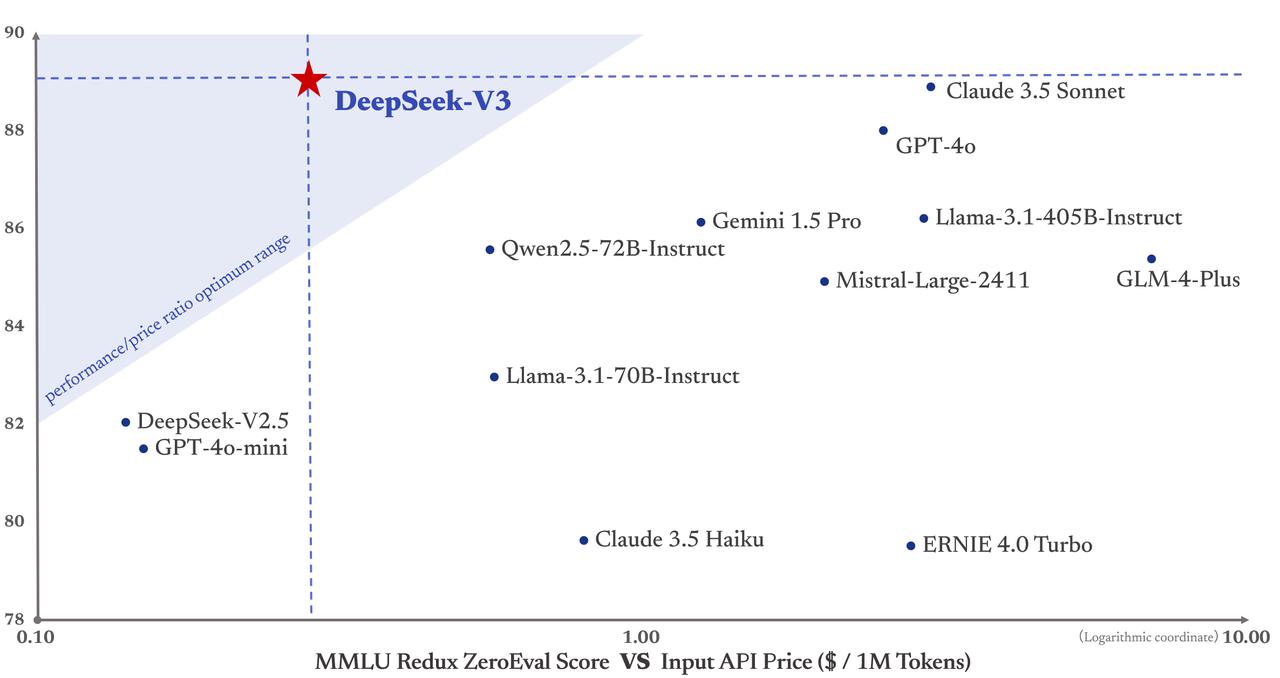

By promoting collaboration and knowledge sharing, DeepSeek empowers a wider community to participate in AI development, thereby accelerating progress in the field. This makes powerful AI accessible to a wider range of users and devices. Here’s the best part: GroqCloud is free for many users. DeepSeek's AI Assistant, powered by DeepSeek-V3, has overtaken rival ChatGPT to become the top-rated free application on Apple's App Store in the United States. DeepSeek is well suited to companies that need complex data analytics and predictive insights, while ChatGPT excels at automating communication and generating content. This combination allows DeepSeek-V2.5 to cater to a broader audience while delivering improved performance across a variety of use cases. While the reported $5.5 million figure represents only a portion of the total training cost, it highlights DeepSeek’s ability to achieve high performance with significantly less financial investment. This move underscores DeepSeek’s ability to disrupt well-established markets and influence overall pricing dynamics. The ability to use only some of the total parameters of an LLM and shut off the rest is an example of sparsity. The main advance most people have identified in DeepSeek is that it can turn large sections of neural network "weights" or "parameters" on and off.
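To make the idea of sparsity concrete, here is a minimal sketch of top-k expert routing, one common way a mixture-of-experts model activates only part of its parameters for each input. It is an illustration under stated assumptions, not DeepSeek's actual implementation: the toy experts, the gate scores, and the names `top_k_route` and `sparse_forward` are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    ranked = sorted(range(len(gate_scores)),
                    key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_scores[i] for i in chosen])
    return list(zip(chosen, weights))


def sparse_forward(x, experts, gate_scores, k):
    """Run only the k selected experts; the others stay switched off."""
    routed = top_k_route(gate_scores, k)
    output = sum(weight * experts[i](x) for i, weight in routed)
    return output, [i for i, _ in routed]


# Hypothetical toy experts: each one just scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
# Hypothetical gate scores; in a real model a learned router produces these.
gate_scores = [0.1, 2.0, -1.0, 1.5]

output, active = sparse_forward(10.0, experts, gate_scores, k=2)
active_fraction = len(active) / len(experts)
print(active, active_fraction, round(output, 2))
```

With `k=2` out of four experts, only half of the expert parameters take part in the computation for this input, which is the sense in which parts of the network are turned "off".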

Abnar and the team ask whether there is an "optimal" level of sparsity in DeepSeek and similar models: for a given amount of computing power, is there an optimal number of these neural weights to turn on or off? I’ll go over each of them with you and give you the pros and cons of each, then I’ll show you how I set up all three of them in my Open WebUI instance! This requires ongoing innovation and a focus on unique capabilities that set DeepSeek apart from other companies in the field. Even though the docs say "All of the frameworks we recommend are open source with active communities for support, and can be deployed to your own server or a hosting provider", they fail to mention that the hosting provider or server needs Node.js to be running for this to work. Therefore, the developments of outside companies such as DeepSeek are broadly part of Apple's continued involvement in AI research. The AI arms race between big tech companies had sidelined smaller AI labs such as Cohere and Mistral. Apple has no connection to DeepSeek, but the tech giant does its own AI research.

By making the resources openly available, Hugging Face aims to democratize access to advanced AI model development techniques and encourage community collaboration in AI research. Nvidia competitor Intel has for many years identified sparsity as a key avenue of research to change the state of the art in the field. AI sector and to showcase China’s burgeoning capabilities in the field. DeepSeek employs distillation techniques to transfer the knowledge and capabilities of larger models into smaller, more efficient ones. It’s like a teacher transferring their knowledge to a student, allowing the student to perform tasks with similar proficiency but with less experience or fewer resources. Experience state-of-the-art artificial intelligence technology for your business needs. The introduction of Apple Intelligence was a clear sign that the Cupertino giant is now fully … However, in 2023, he launched DeepSeek with the aim of working on Artificial General Intelligence. These costs are not necessarily all borne directly by DeepSeek, i.e. they could be working with a cloud provider, but their cost on compute alone (before anything like electricity) is at least $100M’s per year. Open Weight Models are Unsafe and Nothing Can Fix This. In the paper, titled "Parameters vs FLOPs: Scaling Laws for Optimal Sparsity for Mixture-of-Experts Language Models", posted on the arXiv pre-print server, lead author Samir Abnar and other Apple researchers, along with collaborator Harshay Shah of MIT, studied how performance varied as they exploited sparsity by turning off parts of the neural net.
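The teacher-and-student picture of distillation above can be sketched with the classic soft-label objective, in which the student is trained to match the teacher's temperature-softened output distribution. This is an assumption for illustration only: the text does not say which distillation objective DeepSeek uses, and the function names and logits below are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits divided by a temperature (higher = softer)."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
    return kl * temperature ** 2


# Hypothetical logits over three classes.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 4.0, 1.0]

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
print(loss_close < loss_far)
```

A student whose outputs already resemble the teacher's incurs a smaller loss, so minimizing this quantity pushes the smaller model toward the larger model's behavior.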

As you turn up your computing power, the accuracy of the AI model improves, Abnar and the team found. AI researchers have shown for many years that eliminating parts of a neural net could achieve comparable or even better accuracy with less effort. Approaches from startups based on sparsity have also notched high scores on industry benchmarks in recent years. Sparsity also works in the other direction: it can make increasingly efficient AI computers. By prioritizing the development of unique features and staying agile in response to market trends, DeepSeek can maintain its competitive edge and navigate the challenges of a rapidly evolving industry. A lot can go wrong even for such a simple example. We had a lot of stuff teed up. Beyond closed-source models, open-source models, including the DeepSeek series (DeepSeek-AI, 2024b, c; Guo et al., 2024; DeepSeek-AI, 2024a), LLaMA series (Touvron et al., 2023a, b; AI@Meta, 2024a, b), Qwen series (Qwen, 2023, 2024a, 2024b), and Mistral series (Jiang et al., 2023; Mistral, 2024), are also making significant strides, endeavoring to close the gap with their closed-source counterparts. ExLlama is compatible with Llama and Mistral models in 4-bit. Please see the Provided Files table above for per-file compatibility. DeepSeek’s models are subject to censorship to prevent criticism of the Chinese Communist Party, which poses a significant challenge to its global adoption.
